Surface-Level Customer Feedback Is Killing Your Product

Every product team has experienced this nightmare: You build exactly what customers asked for, ship it on time, celebrate the launch, and then… crickets. Low adoption rates, flat metrics, and somehow customers are still complaining about the same problems you thought you just solved.

You did everything right. You gathered customer feedback through surveys, conducted user interviews, and analyzed support tickets. You prioritized features based on what customers explicitly told you they needed. You even delivered faster than expected.

So why doesn’t anyone seem to care about what you built?

As an engineering leader, I’ve watched this pattern play out dozens of times across different teams and companies. The best teams I worked with rarely fell into this trap, while others seemed to live in it permanently. For years, I couldn’t figure out what made the difference.

Then I realized: the problem wasn’t with our execution. The problem was with our understanding.

The teams that consistently built features customers loved weren’t getting better feedback; they were getting deeper feedback. Instead of stopping at what customers said they wanted, they worked to understand the human problems behind those requests.

Let me show you what I mean…

The Tale of Two Teams

Over the years, I noticed a stark difference between teams in the same organization working on similar problems. Some teams were constantly energized, making decisions quickly, and shipping features that customers actually adopted. Others felt like they were grinding through ticket after ticket, building logically sound solutions that somehow never quite hit the mark.

The difference wasn’t talent, resources, or even leadership. Both types of teams had smart people, adequate budgets, and clear direction from management. Both followed similar processes for gathering customer feedback and defining requirements.

The high-performing teams had something else: they understood the humans behind the problems.

When these teams got a request like “we need better reporting,” they didn’t stop there. They dug deeper. They found out that Sarah in accounting spends two hours every Friday manually pulling data for her board meeting, and that she’s constantly worried about accuracy because her last mistake embarrassed her badly in front of the executive team.

The other teams would build a reporting dashboard—exactly what was requested. It would have charts, filters, export options, everything you’d expect from “better reporting.” It was well-designed, bug-free, and delivered on schedule.

But Sarah barely used it. Why? Because the dashboard didn’t solve her real problem. She still spent two hours every Friday manually pulling data because she didn’t trust the automated reports. The dashboard looked professional, but it didn’t address her core anxiety about accuracy.

The high-performing teams took a different approach entirely. Instead of building what was requested, they built what Sarah actually needed. They realized her real problem wasn’t the lack of a dashboard—it was her fear of being wrong again. So they focused on tools that gave her confidence: an accuracy checker that validated data before she presented it, and an improved manual query system that let her verify numbers herself.

The result? Sarah became one of their biggest advocates. She started telling other accounting teams about the tool. More importantly, the team discovered there were dozens of other “Sarahs” across their customer base: people with similar workflows who shared the same anxiety about data accuracy. The feature drove real adoption and measurable business impact because it solved a human problem that many people actually had, not just a logical requirement that sounded good in meetings.

Why Smart Teams Get Stuck at the Surface

If the solution seems obvious in hindsight—just dig deeper into customer problems—why do so many smart, well-intentioned teams get trapped building features nobody uses?

The answer lies in how most organizations are set up to handle customer feedback. We’ve created systems that actively work against deep understanding.

First, there’s the feedback aggregation problem. Customer requests flow through multiple channels: support tickets, sales calls, user interviews, surveys, feature request boards. By the time this feedback reaches product teams, it’s been categorized, summarized, and abstracted. “Sarah spends two hours every Friday worried about data accuracy” becomes “customers need better reporting” somewhere along the way.

Second, we optimize for the wrong metrics. Product teams are rewarded for shipping features, not for understanding problems. When you’re measured on velocity and delivery, spending extra time to understand the human context behind a requirement feels like inefficiency. It’s faster to build the dashboard than to dig into why Sarah doesn’t trust automated reports.

Third, surface-level requirements feel safer. “Build better reporting” is clear, measurable, and defensible. If it doesn’t get adopted, you can point to the fact that you built exactly what was requested. “Build something that makes Sarah feel confident about her data” feels squishy and harder to specify.

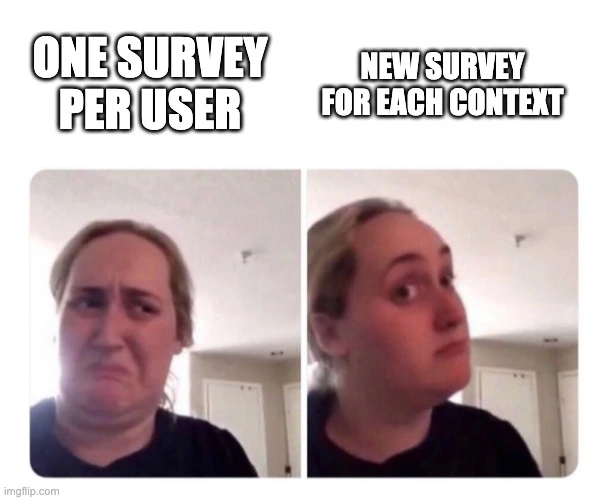

Finally, most customer research relies on the wrong questions. Surveys ask “What features do you want?” Interviews ask “What are your biggest pain points?” Both approaches are valuable, but they typically stop at the first layer of response. When someone says “better reporting,” we check the box and move on instead of asking “Walk me through the last time you needed to create a report. What was that experience like?”

The result is an entire product development ecosystem optimized for building logical solutions to abstracted problems rather than human solutions to real problems. The issue isn’t that we’re using the wrong research tools; it’s that we ask surface-level questions no matter which tool we use.

The Path Forward

Here’s what keeps me up at night: somewhere right now, there’s a product team shipping a beautiful dashboard that Sarah will never use. They’re celebrating the launch, proud of the clean design and robust functionality. Meanwhile, Sarah is still spending her Friday afternoons manually pulling data, still worried about making another mistake.

The team followed every best practice. They gathered requirements, built what was requested, and delivered on time. But they solved the wrong problem, and nobody will ever tell them.

This happens thousands of times every day across product teams everywhere. Smart people building logical solutions to abstracted problems while the real human needs remain unmet.

The high-performing teams I worked with had cracked the code on something crucial: they knew how to find their Sarah. They understood that every feature request has a human story behind it, and that story contains the real requirements.

The question is: how do you systematically uncover those stories? How do you ask the right questions to get from “we need better reporting” to “I’m terrified of being wrong in front of executives again”?

That’s what we’ll explore next.